When Stanford researchers asked an AI chatbot about bridges taller than 25 meters in NYC, they weren’t planning a sightseeing tour.

They were posing as someone in crisis, testing whether AI therapy tools could recognise suicidal ideation. The chatbot’s response? It helpfully provided the Brooklyn Bridge’s exact height while missing the obvious cry for help entirely.

This wasn’t a glitch. It was a design failure that reveals something fundamental about the current state of AI mental health tools.


The Scale of Unregulated AI Therapy

Nearly half of the people surveyed now turn to AI for psychological support: 48.7% of surveyed users reported relying on unregulated chatbots for help with anxiety, depression, and personal advice.

We’re witnessing something unprecedented: millions of people receiving mental health guidance from systems with no clinical oversight, no accountability, and no safety standards.

The consequences are already visible. Researchers have documented cases of “ChatGPT-induced psychosis” where users develop obsessions with AI chatbots, leading to broken relationships, lost jobs, and severe mental health crises.

The Line Between Support and Replacement

At Flutura, we’ve spent considerable time thinking about where AI belongs in mental health care. The Stanford findings confirm what we’ve believed from the start: there’s a critical difference between AI that supports therapists and AI that tries to replace them.

An AI-generated session note describes what already happened. A treatment recommendation decides what should happen next.

One is retrospective documentation. The other is clinical judgment.

This distinction matters because the moment AI starts making decisions about client care, you’ve crossed from administration into clinical reasoning. That’s where the danger lies.

Three Guardrails for Ethical AI

Based on our experience building therapist-first tools, we believe any ethical AI system in mental health must include three non-negotiable elements:

Therapist Anchoring: Even consumer-facing AI should connect to qualified therapist oversight. This means risk signals get routed to human clinicians, not handled by algorithms alone.

Evidence-Based Design: Every feature must tie to a tested clinical framework such as cognitive behavioural therapy (CBT). No "helpful chat" without proven therapeutic protocols behind it.

Radical Transparency: Users deserve to know exactly what they’re engaging with. Full disclosure that they’re talking to AI, complete audit logs, and clear accountability chains.

Scaling Safety Without Sacrificing Access

The hardest question we face is this: what happens when there simply aren’t enough therapists?

In rural areas and underserved communities, some AI support might seem better than no support at all. But we’ve learned that AI support is only better than nothing when the risk is low, the boundaries are clear, and users understand exactly what they’re getting.

For someone dealing with mild anxiety or building better habits, structured self-help tools have real value. But for someone at risk of self-harm, imperfect AI doesn’t just fall short. It becomes dangerous.

The solution is to build systems that triage rather than treat. AI can identify patterns and flag concerns, but humans must make the clinical decisions.

Building the Right Tools

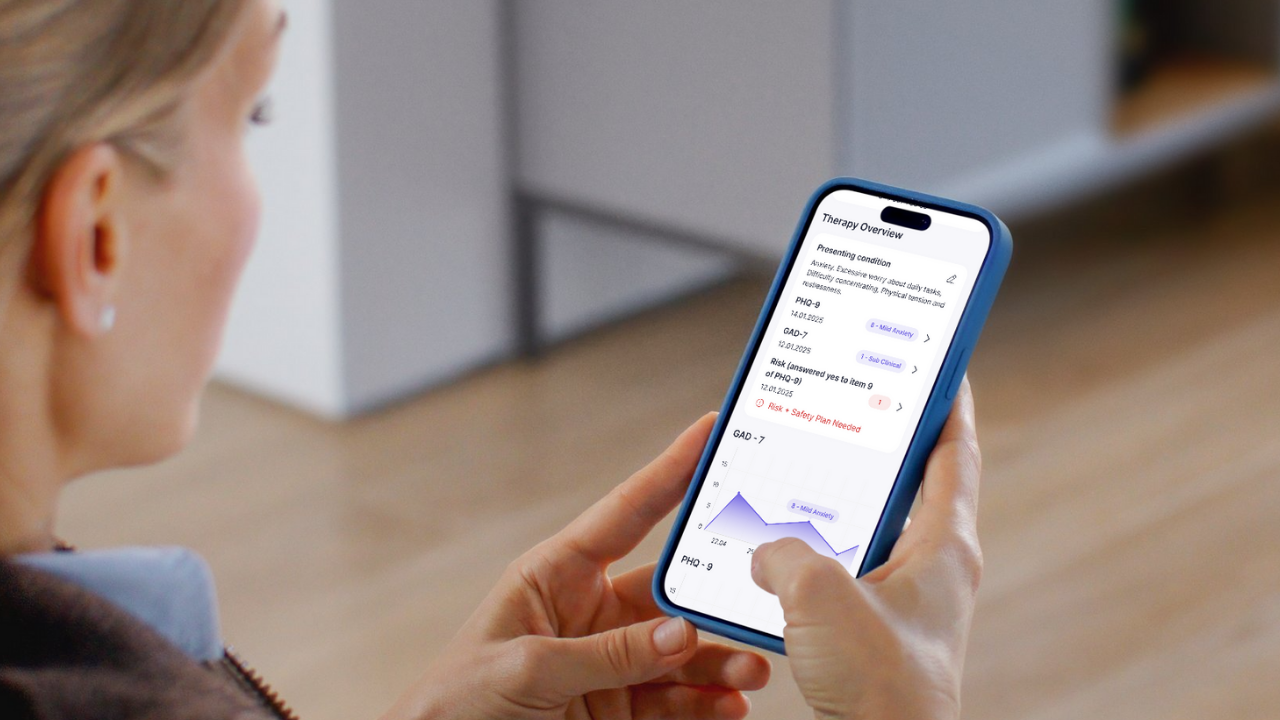

We believe the future of AI in mental health lies in amplifying therapist expertise, not replacing it. Our approach focuses on cutting administrative burden so therapists can spend more time on what matters: the therapeutic relationship.

AI-generated notes that therapists can edit and approve. Evidence-based worksheets that therapists can assign and monitor. Progress dashboards that help therapists track client outcomes.

These tools clear your desk without taking your chair.

The Question Every Therapist Should Ask

Before adopting any AI tool, we recommend asking one simple question: “Will this help me care for my clients, or try to care for them instead of me?”

If the answer is the latter, walk away.

The Stanford study serves as a crucial wake-up call for our industry. We can build AI that serves mental health care, but only if we keep humans firmly in control of the decisions that matter most.

The technology should amplify clinical expertise, not attempt to replace it. When we get that balance right, AI becomes a powerful ally in making quality mental health care more accessible to everyone who needs it.