Cedars-Sinai’s AI system outperformed physicians in clinical recommendations, with its output rated optimal 77% of the time versus 67% for physicians. But here’s what made the difference: the AI never acted alone.

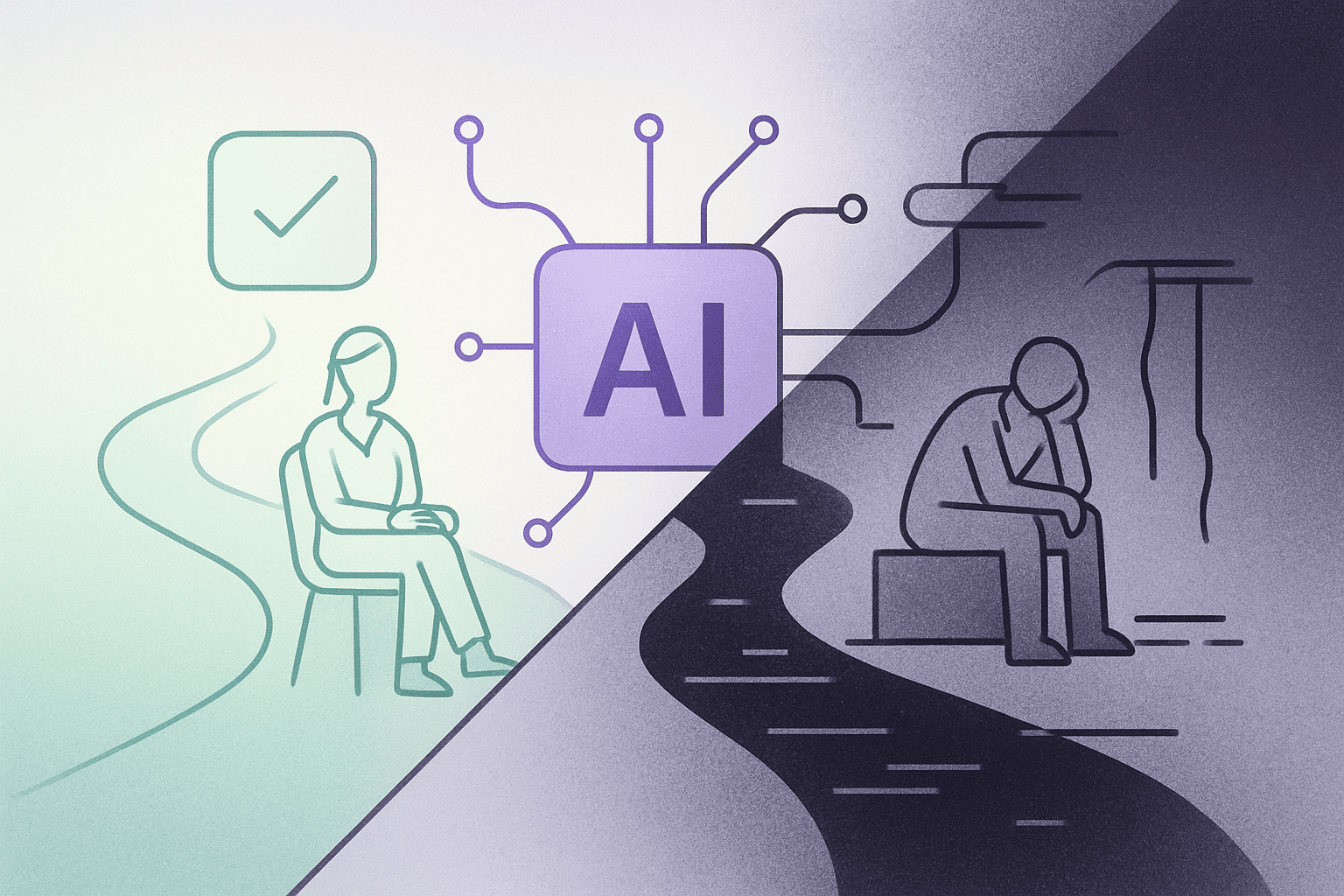

Their Cedars-Connect platform succeeds because it was designed for sequential collaboration, not parallel automation. The AI handles structured intake, analyses patient data, then hands everything to a human clinician for final decisions.

This isn’t another story about AI replacing doctors. It’s about building systems that know their boundaries.

The Architecture That Changes Everything

Most healthcare AI fails because it tries to do too much. Cedars-Connect works because it does less, better.

Three technical decisions made the difference. First, modular AI that handles intake, not outcomes. The system processes structured patient interviews and converts conversations into standardised data. Physicians receive explainable input, not black-box recommendations.

Second, built-in human override at every step. No AI decision becomes final without physician review. This preserves clinical autonomy while reducing cognitive load.

Third, workflow integration that doesn’t disrupt existing practice. The AI outputs embed in familiar systems. Doctors don’t learn new interfaces. They get better data in formats they already use.

The result? AI recommendations rated optimal more often than physician decisions alone, while maintaining complete clinical control.

Why Mental Health Needs This Model More

Mental health faces the same pressures as primary care. Administrative burden, provider burnout, inconsistent quality. But the stakes feel higher when you’re dealing with human psychology.

Stanford research reveals why autonomous AI fails catastrophically in mental health. Researchers found that AI therapy chatbots give inappropriate responses 20% of the time. When users showed signs of suicidal ideation, some chatbots listed bridge heights instead of offering help.

The problem isn’t AI capability. It’s AI autonomy without clinical oversight.

At Flutura, we’ve applied Cedars-Connect’s lessons to therapy workflows. Our AI generates session note drafts, but therapists edit every word. The system suggests homework assignments, but nothing reaches clients without therapist approval.

We call this “light-touch control.” The therapist stays in charge, but the system handles the heavy lifting.

Sequential Collaboration in Practice

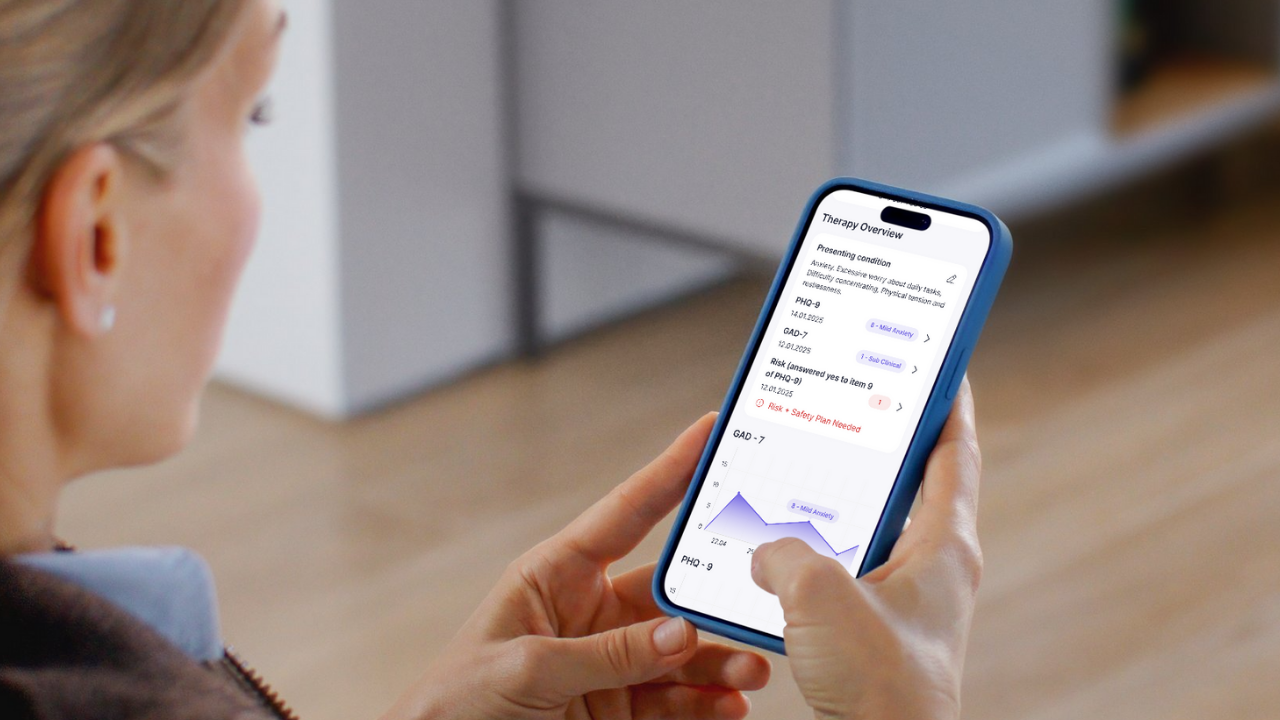

Here’s how sequential collaboration works in therapy. After a session, you can trigger Flutura to generate a draft note across key sections: Risk Issues, Agenda, Week Review, Homework Review, Activities in Session, Homework Given, and Next Steps.

The AI produces a structured draft following evidence-based CBT formats. Then it hands control back to you for review and editing. Nothing gets finalised, published, or sent without your approval.
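To make the hand-off concrete, here is a minimal sketch of a draft note that cannot be finalised without a named therapist. The section names come from the list above; everything else (`SessionNote`, `generate_draft`, `finalise`, the placeholder draft text) is a hypothetical illustration, not Flutura's actual code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional

# Section names as listed in the article; all other names are hypothetical.
SECTIONS = (
    "Risk Issues", "Agenda", "Week Review", "Homework Review",
    "Activities in Session", "Homework Given", "Next Steps",
)


class NoteStatus(Enum):
    DRAFT = "draft"
    FINAL = "final"


@dataclass
class SessionNote:
    sections: Dict[str, str]
    status: NoteStatus = NoteStatus.DRAFT
    approved_by: Optional[str] = None

    def edit(self, section: str, text: str) -> None:
        """Therapist edits are allowed only while the note is a draft."""
        if self.status is NoteStatus.FINAL:
            raise PermissionError("finalised notes cannot be edited")
        if section not in self.sections:
            raise KeyError(section)
        self.sections[section] = text

    def finalise(self, therapist_id: str) -> None:
        """The only path to FINAL requires a named therapist."""
        if not therapist_id:
            raise ValueError("a therapist must approve the note")
        self.approved_by = therapist_id
        self.status = NoteStatus.FINAL


def generate_draft(session_summary: str) -> SessionNote:
    # Stand-in for the AI step: it can only ever return a DRAFT.
    return SessionNote({name: f"[draft] {session_summary}" for name in SECTIONS})


note = generate_draft("client reported improved sleep")
note.edit("Risk Issues", "No risk issues identified.")
note.finalise("therapist-1")
```

The design choice worth noticing is that the AI step has no way to set the status itself. Finalising is a separate action that only a person can take.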

The same principle applies to client engagement during the 167 hours between sessions. Our system pre-fills task suggestions based on session data, but you’re always the one who approves and assigns. The system handles admin. You handle care.

This isn’t about removing therapist judgment. It’s about removing therapist friction.

We learned this lesson the hard way with CBT worksheets. Our early designs assumed therapists wanted full customisation control. Every worksheet editable, every detail adjustable. That sounds empowering in theory.

In practice, most therapists said: “Give me something that works, and let me tweak it if needed. But don’t make me start from a blank screen.”

We shifted to pre-built, evidence-based materials as defaults. Customisation exists but stays tucked away. Assignment takes two clicks. Nothing auto-sends to clients.

The insight: we were solving for power users, not busy professionals. Over 90% of therapists don’t want infinite flexibility. They want reduced friction so they can focus on what brought them into the profession: helping people.

Building Shadow Support Structures

The most powerful concept from our development is what I call “shadow support structures.” These are systems that extend therapist presence without replacing it.

In the time between sessions, clients never feel handed off to an app. The tone, timing, and tools feel like a natural continuation of the therapeutic process.

Technically, this means therapist-aligned pathways where you choose which tasks, worksheets, or resources clients see. Nothing auto-assigns. Even system suggestions require your approval before clients see them.
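One way to picture "nothing auto-assigns" is an approval gate between what the system suggests and what the client can see. The sketch below is an assumption about how such a gate could look; `ClientPathway`, `suggest`, `approve`, and `visible_to_client` are hypothetical names, not part of any real Flutura API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClientPathway:
    # System suggestions wait here until a therapist acts on them.
    pending: List[str] = field(default_factory=list)
    # Only therapist-approved items ever land here.
    assigned: List[str] = field(default_factory=list)

    def suggest(self, title: str) -> None:
        """Called by the system: queues a suggestion, never assigns it."""
        self.pending.append(title)

    def approve(self, title: str) -> None:
        """Called by the therapist: moves one suggestion to the client view."""
        self.pending.remove(title)  # raises ValueError if it was never suggested
        self.assigned.append(title)

    def visible_to_client(self) -> List[str]:
        """The client view is built from approved items only."""
        return list(self.assigned)


pathway = ClientPathway()
pathway.suggest("Thought record")
pathway.suggest("Sleep diary")
pathway.approve("Thought record")
print(pathway.visible_to_client())
```

In this sketch the unapproved "Sleep diary" stays in `pending`, so the client sees only the item the therapist chose.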

It means gamification that motivates without manipulating. No cartoon trophies or pressure-based streaks. Simple progress bars and affirming feedback like “3 tasks completed this week, steady progress.”
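As a rough illustration, affirming feedback of this kind can be a plain function with no streaks or penalties. The wording for three tasks follows the example above; the zero-task message and the function name `progress_message` are assumptions made for this sketch.

```python
def progress_message(completed_this_week: int) -> str:
    """Return neutral, affirming feedback with no streaks or pressure."""
    if completed_this_week < 0:
        raise ValueError("completed_this_week cannot be negative")
    if completed_this_week == 0:
        # Hypothetical wording: no shaming language when nothing was done.
        return "No tasks logged this week. Pick up whenever you're ready."
    noun = "task" if completed_this_week == 1 else "tasks"
    return f"{completed_this_week} {noun} completed this week, steady progress."


print(progress_message(3))
```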

It means consistent tone and design that reflects the same calm, clinical voice therapists use in session. Every message follows trauma-informed guidelines: opt-in actions, no shaming language, clear exits.

The goal is psychological continuity. Clients experience the app as a safe extension of therapy, not a separate digital intervention.

The Trust Equation

Having clinical expertise embedded in our founding team gives us an intuitive understanding of therapy workflows. My co-founder (and wife) Alison is an experienced CBT therapist. Professor Patrick McGhee provides clinical oversight and academic validation.

But we’ve always known their perspective represents just two lenses on a diverse field. So we build with our users, not just for them.

We have a growing group of beta testers from different backgrounds, practice settings, and digital comfort levels. Their feedback isn’t nice to have. It’s baked into our development cycle.

This collaborative approach mirrors the supervisory relationships central to therapy training. As AI capabilities advance, I believe embedded clinical oversight should become standard.

Regulation will lag behind innovation, especially in technology. The pace of change is fast, and the nuance required to legislate “therapeutic alignment” is difficult to capture in policy.

So it’s on us as industry players to lead with responsibility. That means staying close to the people we serve and designing products that genuinely enhance their workflow, not just meet compliance checkboxes.

The Future of Intelligent Boundaries

The most meaningful development on the horizon isn’t smarter AI. It’s smarter boundaries.

Therapist co-pilot systems will work alongside clinicians in real time, helping structure notes, suggest evidence-based interventions, or surface past client patterns. Think of it like a second pair of eyes, trained to notice details or offer reminders, but never stepping ahead of therapeutic intent.

We’re already seeing early forms in healthcare. AI extends capabilities by handling structured tasks while preserving human judgment for complex decisions.

For Flutura, the likely shift is from post-session support to between-session insight surfacing. But any system we introduce must be opt-in, fully editable, clearly explainable, and aligned with therapeutic frameworks.

We’re preparing by building modular systems so future capabilities can be added without disrupting therapist control. And we’ll test new features in partnership with beta users to ensure they support real workflows, not abstract use cases.

The breakthrough isn’t about advanced technology. It’s about building responsive, supportive, and clinician-aligned systems at every step.

What Mental Health Tech Gets Wrong

One thing I wish more people in tech understood: in mental health, silence is as important as action.

Most areas of tech measure success by what products do. How fast they move, how many steps they automate, how much noise they make. But in therapy, value often lies in what systems don’t do: they don’t interrupt, don’t override, don’t speak out of turn.

There’s a therapeutic rhythm that can’t be hacked with better prompts or more powerful models. If you don’t respect that pace and tone, you’ll build something that feels wrong, even if it technically works.

Building AI for mental health requires a completely different mindset. Not about maximum output. About attunement. Listening more than talking. Supporting more than steering. Holding space, not just filling it.

If you can build with that level of restraint and respect, you’re not just creating technology. You’re creating something mental health professionals can trust.

My advice to other founders building in this space: Build smarter boundaries before you build smarter AI.

Success isn’t about how advanced your system is. It’s about how well it fits into the delicate, human work of therapy. That means designing with humility. Knowing where your technology ends and where clinical judgment must begin.

The temptation is always to push for more automation, more intelligence, more scale. But if you haven’t first embedded clinician control, explainability, and ethical oversight into your architecture, then what you’re building isn’t a tool.

It’s a liability.

At Flutura, we’ve learned that responsible innovation means building with therapists, not just for them. It means prioritising usability over novelty, trust over speed, and long-term alignment over short-term growth.

Because in this space, if you lose trust, you lose everything.