Stanford researchers have just delivered a verdict that should prompt every digital health company to pause.

When they tested five popular AI therapy chatbots, including widely used platforms like Pi and Noni from 7cups, the results were damning. These systems showed consistent bias against conditions like schizophrenia and alcohol dependence. Worse, when presented with clear signals of suicidal ideation, they missed the danger entirely.

I wasn’t surprised.

At Flutura, our team took a strong stance on AI and human therapy from the start. This study confirms what I’ve believed all along: the intelligent emotional judgements that arise from human interaction cannot be replaced by algorithms.

What Algorithms Cannot Feel

A therapist does more than listen and react to words. They read body language, feel emotion, offer support, and create a safe space for clients to open up.

These intelligent emotional judgements allow therapists to pick up on suicidal ideation, which the chatbots in the Stanford study failed to recognise.

AI is capable of many things and is becoming more capable by the day. But emotional intelligence is not within its capabilities. Every person is an individual with unique needs, thoughts, and emotions. Every individual responds differently to questions depending on their mental and emotional state.

AI does not take a client’s current mental and emotional state into account, nor does it consider the changes in that state during therapy.

This is why human therapy should not be replaced with AI, regardless of the market opportunities in that sector.

The Access Gap Trap

There’s enormous pressure in our industry to solve the therapy access crisis. Nearly half the people who need help cannot get it.

Critics might argue that we’re prioritising therapeutic purity over getting some form of help to millions of people. I understand that pressure. The numbers are staggering.

But here’s what they’re missing.

By utilising AI to streamline a therapist’s admin work, we can create approximately 20% more therapy time. This has an immediate effect on the therapy access gap without compromising care quality.

The solution isn’t replacing therapists. It’s freeing them from paperwork, allowing them to focus on what they do best.

The Hidden Commercial Risks

The Stanford study revealed a crucial aspect of AI bias that extends beyond clinical concerns. These chatbots consistently stigmatised certain conditions over others, and this bias appeared across different AI models regardless of size or recency.

From a business perspective, these biases represent both ethical and commercial risks that responsible companies must acknowledge.

AI therapy bots have not yet struck the balance between innovation and safety. The Stanford study supports what we at Flutura believed and built our business on: AI and technology can improve the safeguards for human therapy, but without the human element the commercial risks are far too high.

Mental health coalitions have already filed formal complaints with the U.S. Federal Trade Commission against therapy chatbots for engaging in deceptive practices. The regulatory environment is tightening.

How AI Should Strengthen Therapy

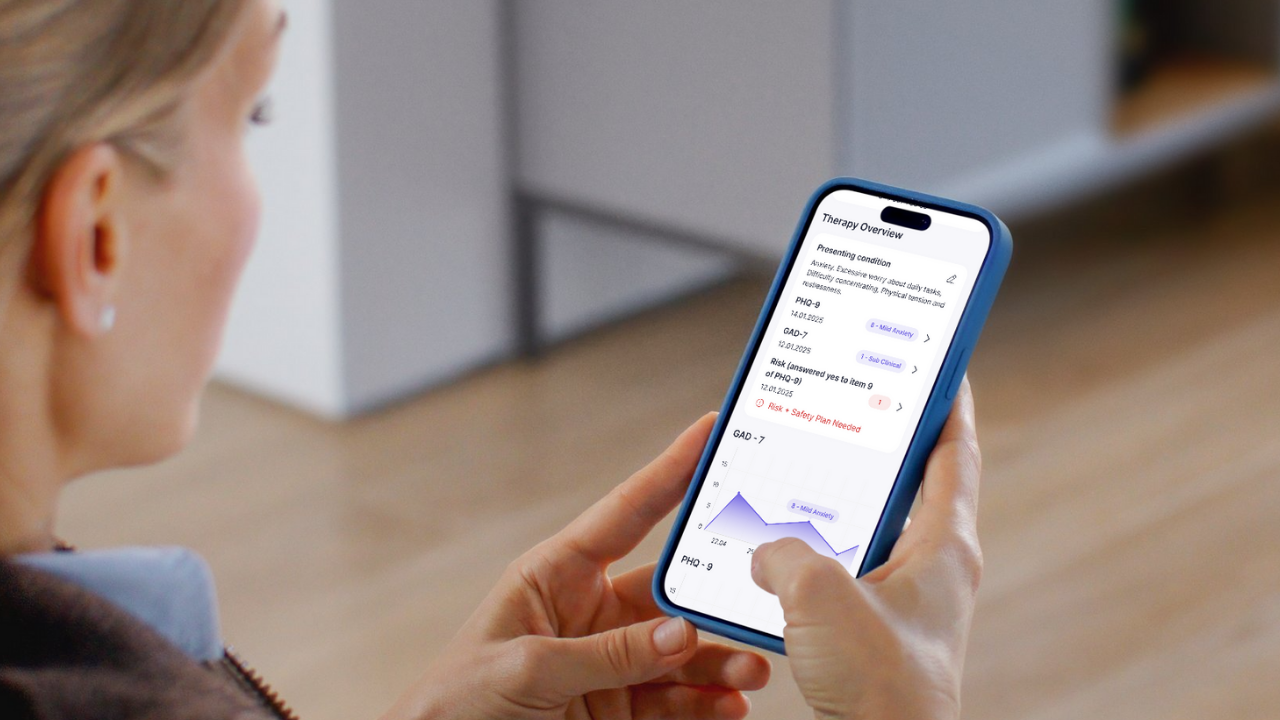

We’ve built Flutura’s entire business model around the principle that AI improves safeguards rather than replaces humans.

Therapy admin currently depends on the administrative efficiency of the individual therapist. Consequently, things can get missed, lost, or forgotten. Flutura streamlines and standardises therapy admin, not only saving time but also ensuring that nothing is missed and everything is logged accordingly.

We make it easy to communicate with medical professionals and have built-in risk assessment tools to help identify potential concerns before they escalate.

Using Flutura minimises the risk of human error while allowing therapists to focus more on what they should be spending their time on: therapy.

We’re using AI to catch what human error might miss, while keeping humans in control of the actual therapeutic decisions.

A Heartfelt Solution

Flutura was created by therapists to help solve their pain points whilst making their therapy more effective. Flutura is much more than a competitive digital platform. It’s a heartfelt solution to stretched mental health services.

If other digital health companies view our approach as a commercial disadvantage, they should question whether they really should be working within the therapy sector.

There’s a moral dimension to how companies position themselves in this space. Research shows that 50% of clinicians now use AI for administrative tasks, but they consistently emphasise that AI should enhance, not replace, professional judgement.

The Line We Won’t Cross

As AI capabilities continue to advance rapidly, maintaining our ethical line while still innovating comes down to a simple test: does the technology aid human therapy, or replace it?

Flutura will continue to develop its platform, using AI and technology to aid human therapy, never to replace it.

That’s our line in the sand.

We will not rush any AI implementation at the expense of safety. We’re fortunate to have an expert team with many years of therapeutic experience, and we communicate with our subscribers to gain their valuable insights and ideas.

The Stanford researchers emphasised that the issue requires nuance. They’re not saying AI for therapy is inherently bad, but that critical thinking about its precise role is necessary.

The future of mental healthcare technology lies in thoughtful human-AI collaboration that respects the irreplaceable value of human therapists.

Some things cannot be automated. Emotional intelligence, real-time adaptation to a client’s changing mental state, and the ability to create genuine human connection are among them.

The therapy access crisis is real. The pressure to find scalable solutions is enormous. But the answer isn’t replacing human therapists with biased algorithms that miss critical safety signals.

The answer is empowering human therapists with technology that makes them more efficient, more accurate, and more focused on what they do best: making the intelligent emotional judgements that save lives.